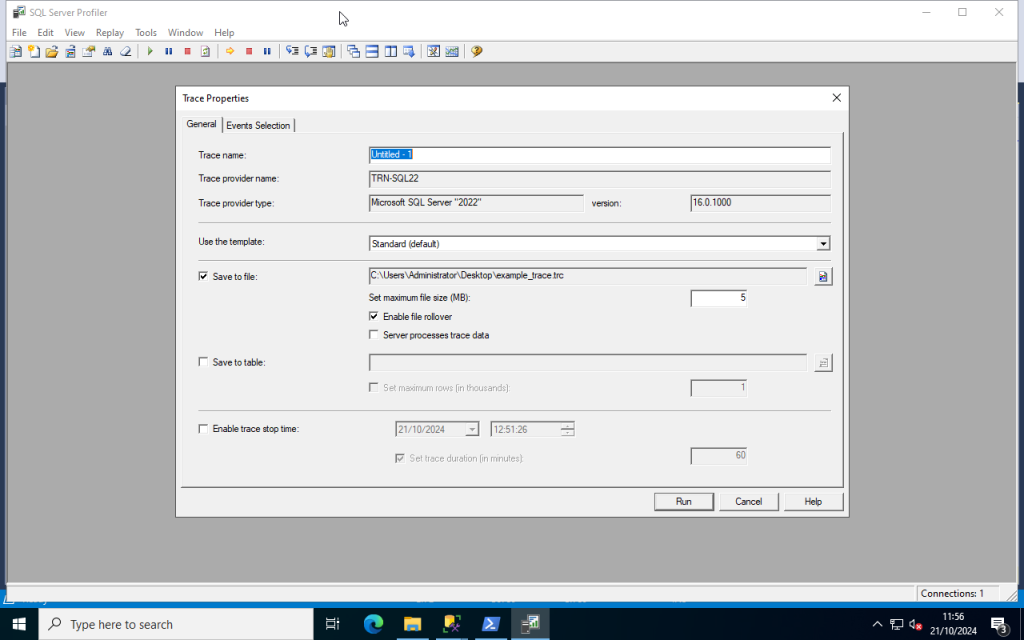

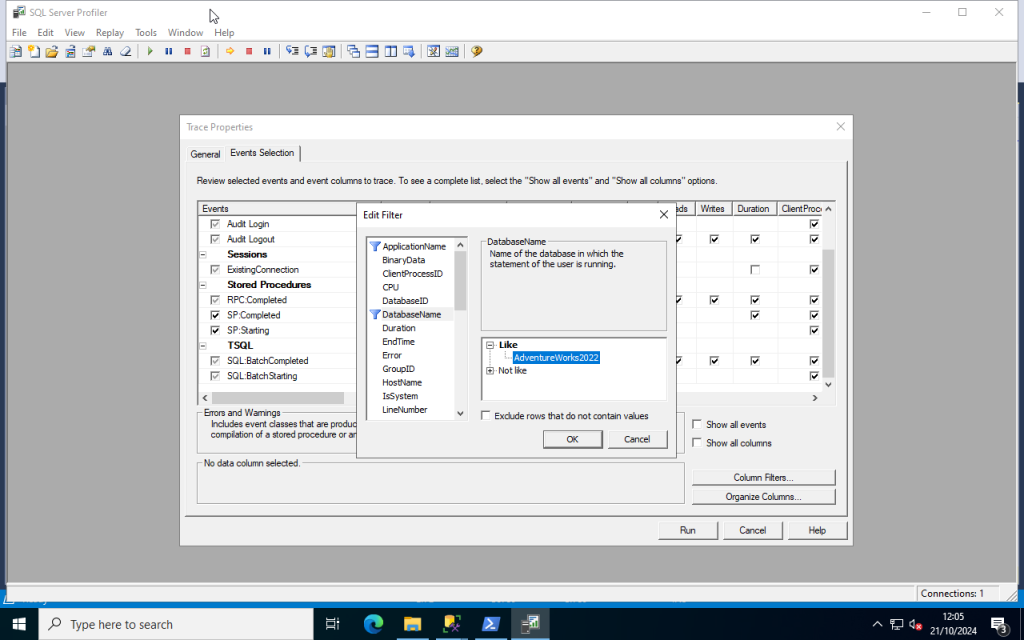

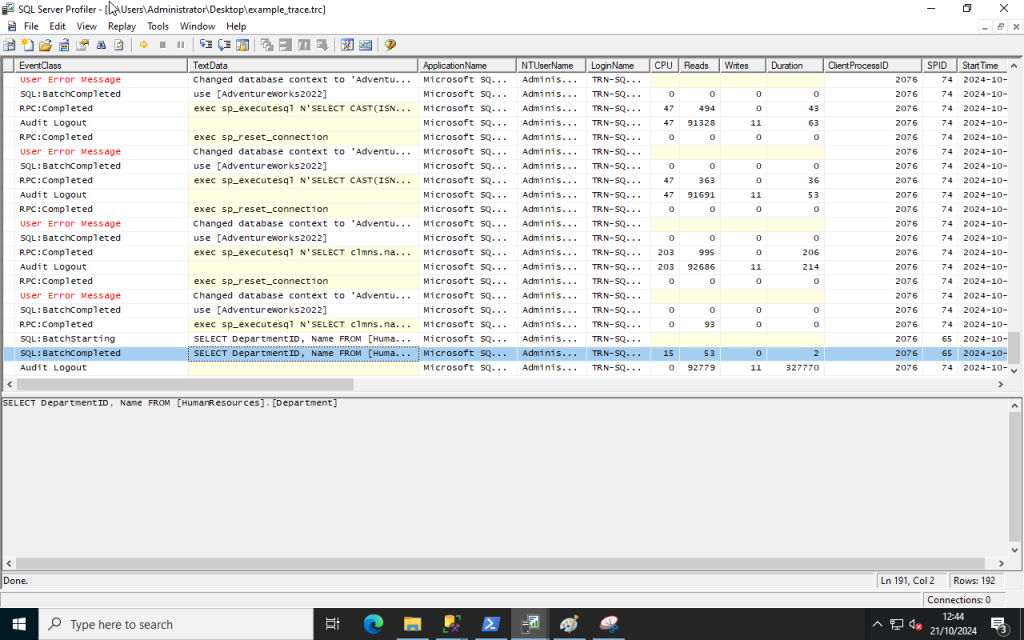

I’ve previously written about SQL Server Profiler and its use in diagnosing problematic T-SQL queries, stored procedures, and application behaviours. Although SQL Server Profiler is very much the trusted tool for this job, Microsoft has marked it as deprecated, which unfortunately means it will be removed in a future version of SQL Server.

In place of Profiler, there is another feature called Extended Events (also referred to as XEvents or XEs). As it is Microsoft’s intended replacement for Profiler, it’s important to learn how this feature works in anticipation of a future release of SQL Server in which Profiler is no longer available.

A key advantage of Extended Events is that they have a lower performance overhead than SQL Server Profiler and are designed to be left running to collect data. You’ll also notice that Extended Events sessions are created and managed directly in SSMS rather than in a separate tool. As sessions are stored as objects within the SQL Server instance, other DBAs can also make use of them.
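Because sessions live inside the instance, you can also see them from T-SQL. The query below is a small sketch that lists every session defined on the instance and whether it is currently running, using the `sys.server_event_sessions` catalog view and the `sys.dm_xe_sessions` DMV. Expect built-in sessions such as `system_health` to appear alongside your own; the exact permissions needed to read these views depend on your version and setup.

```sql
-- List every Extended Events session defined on this instance.
-- Sessions are stored server-side, so any DBA with permission can see them.
SELECT s.name,
       s.startup_state,  -- 1 = session starts automatically with the instance
       CASE WHEN r.name IS NULL THEN 'Stopped' ELSE 'Running' END AS current_state
FROM sys.server_event_sessions AS s
LEFT JOIN sys.dm_xe_sessions AS r   -- only running sessions appear in this DMV
       ON r.name = s.name
ORDER BY s.name;
```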

Let’s work through a simple Extended Events session that captures the SQL queries run against an AdventureWorks2022 test database.

Note: the following example was done on SQL Server 2022 CU17 running on Windows Server 2022 update 2025-01, using SQL Server Management Studio (SSMS) 20.2.

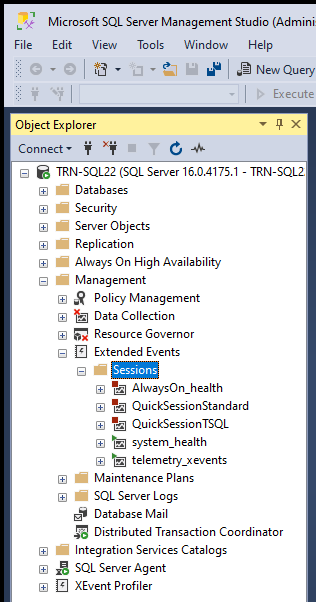

Step 1: Launch SSMS from the Start menu and log in to the required SQL Server instance. Once you are logged in, go to Instance Name > Management > Extended Events in Object Explorer.

Step 2: Create a session by right-clicking the Sessions folder and selecting New Session Wizard.

Step 3: Click Next to skip past the introduction.

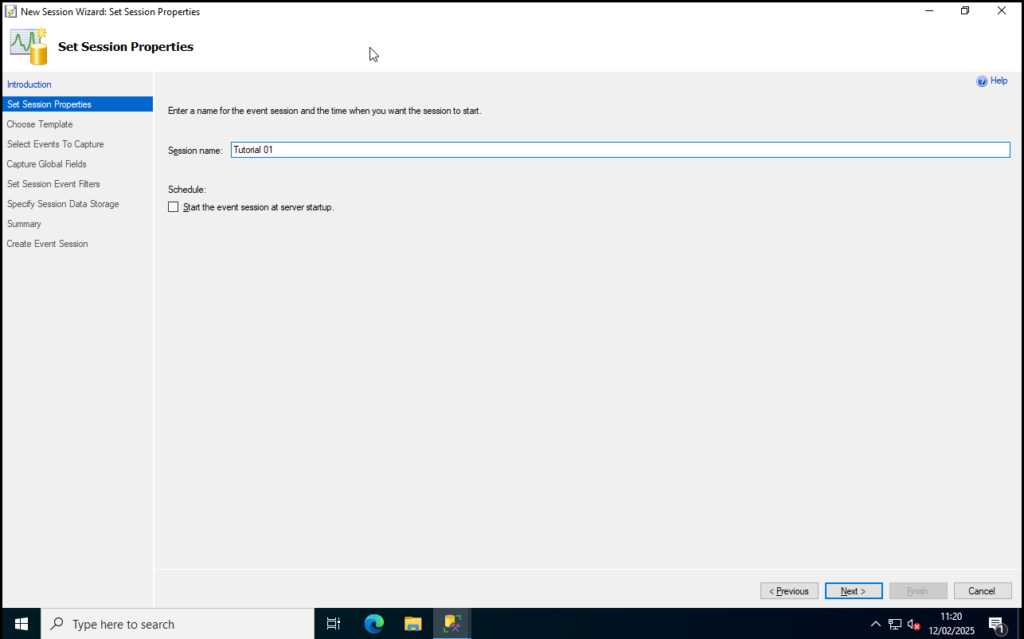

Step 4: Give your XEvent session a descriptive name. For this example I’ve used Tutorial 01. Click Next to proceed.

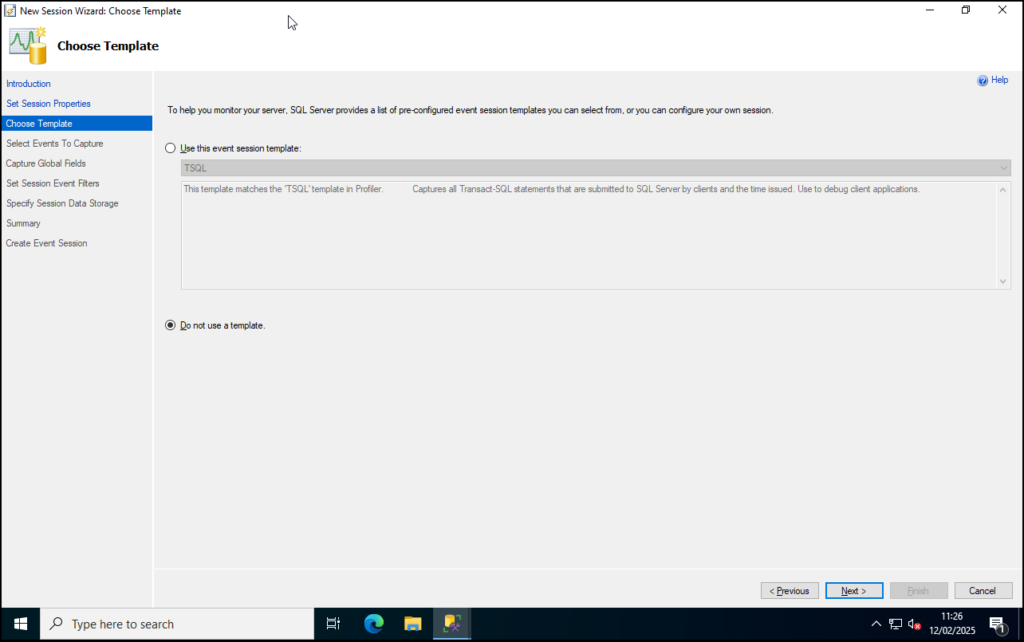

Step 5: Although there are some templates to choose from, select Do not use a template and click Next.

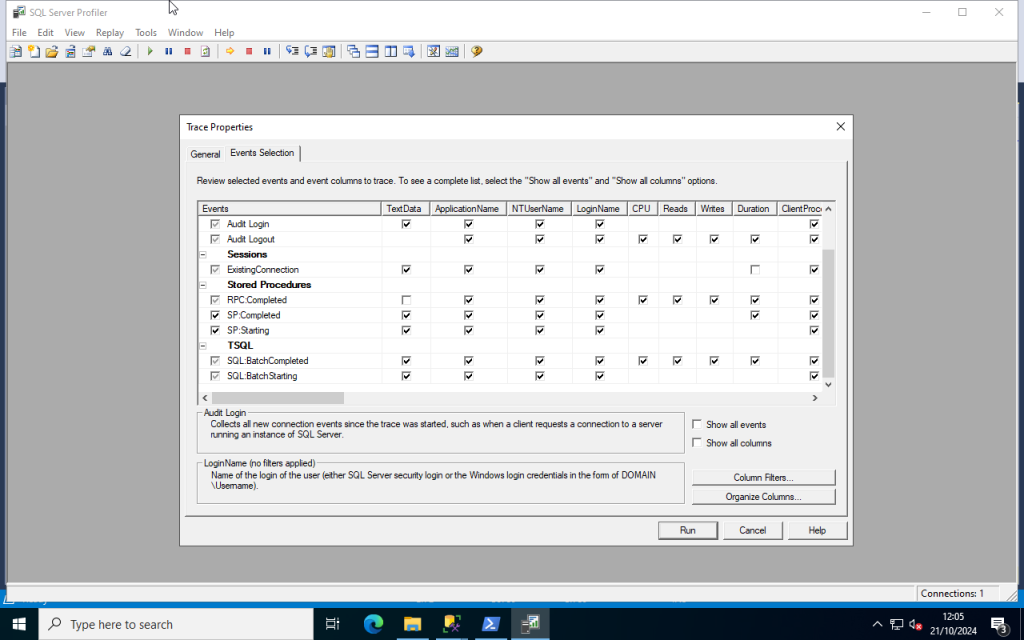

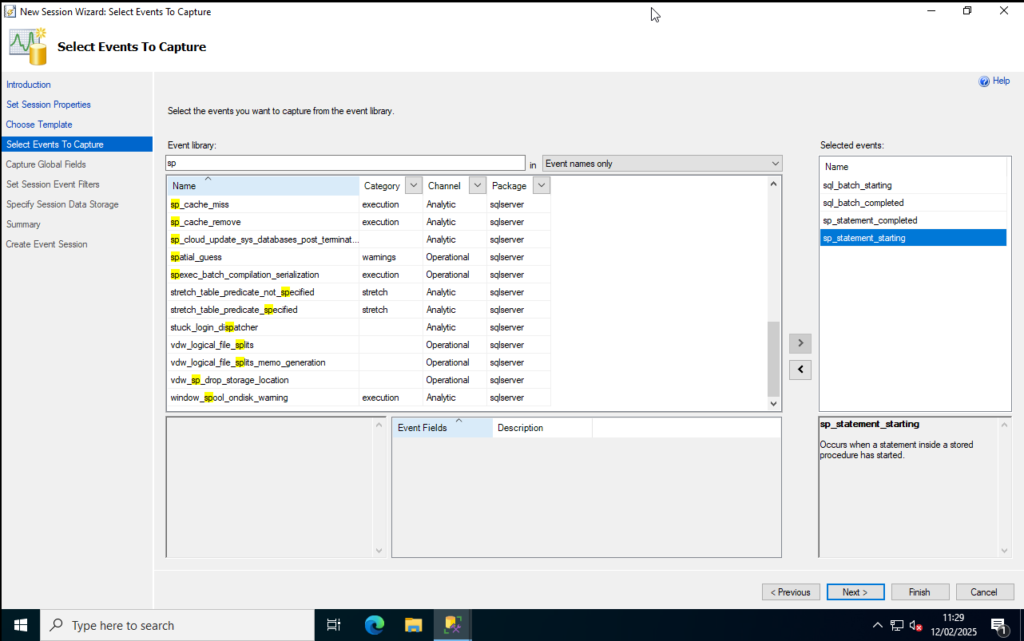

Step 6: This page allows you to select the events you want to track. Search for each of the following events and double-click it to add it to the list on the right. Click Next once you have added them all:

- sql_batch_starting

- sql_batch_completed

- sp_statement_starting

- sp_statement_completed
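If you want to check what these events capture before adding them, the same metadata the wizard searches is exposed through DMVs. This sketch looks up the four events above in `sys.dm_xe_objects`, joined to `sys.dm_xe_packages` to show which package each belongs to, along with Microsoft’s built-in description of each event.

```sql
-- Look up the four events chosen in Step 6, with their package and description.
SELECT p.name AS package_name,
       o.name AS event_name,
       o.description
FROM sys.dm_xe_objects AS o
JOIN sys.dm_xe_packages AS p
     ON o.package_guid = p.guid
WHERE o.object_type = 'event'
  AND o.name IN (N'sql_batch_starting', N'sql_batch_completed',
                 N'sp_statement_starting', N'sp_statement_completed')
ORDER BY o.name;
```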

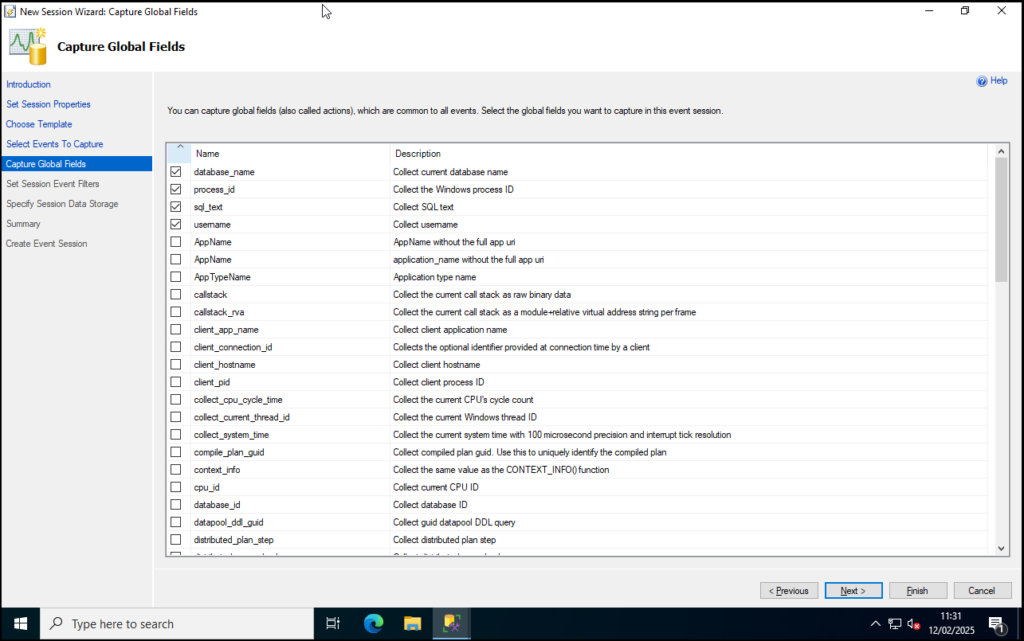

Step 7: In the list of global fields (actions) to capture, select the following and then click Next:

- database_name

- process_id

- sql_text

- username
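In Extended Events terminology these global fields are called actions. The query below is a sketch that returns the package-qualified name of each field selected above (the form you would use when scripting a session in T-SQL), so you can confirm which package each one lives in on your own instance.

```sql
-- Global fields are "actions"; show each one as package.action_name.
SELECT p.name + N'.' + o.name AS action_name,
       o.description
FROM sys.dm_xe_objects AS o
JOIN sys.dm_xe_packages AS p
     ON o.package_guid = p.guid
WHERE o.object_type = 'action'
  AND o.name IN (N'database_name', N'process_id', N'sql_text', N'username')
ORDER BY action_name;
```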

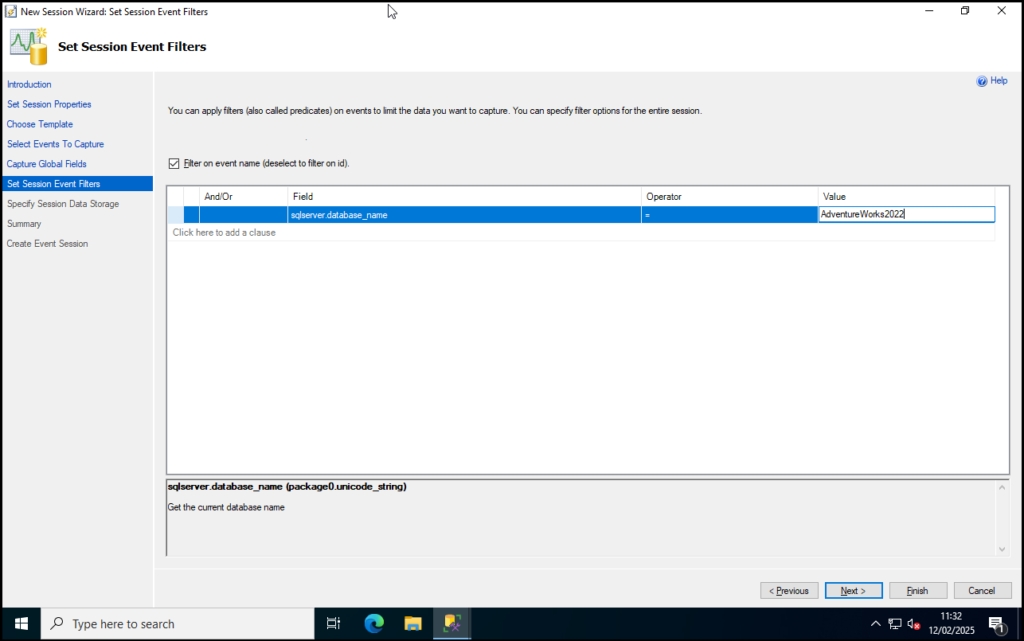

Step 8: For filters, I’m going to add a line that narrows the XEvent down to AdventureWorks2022 using the sqlserver.database_name field. Click Next once set:

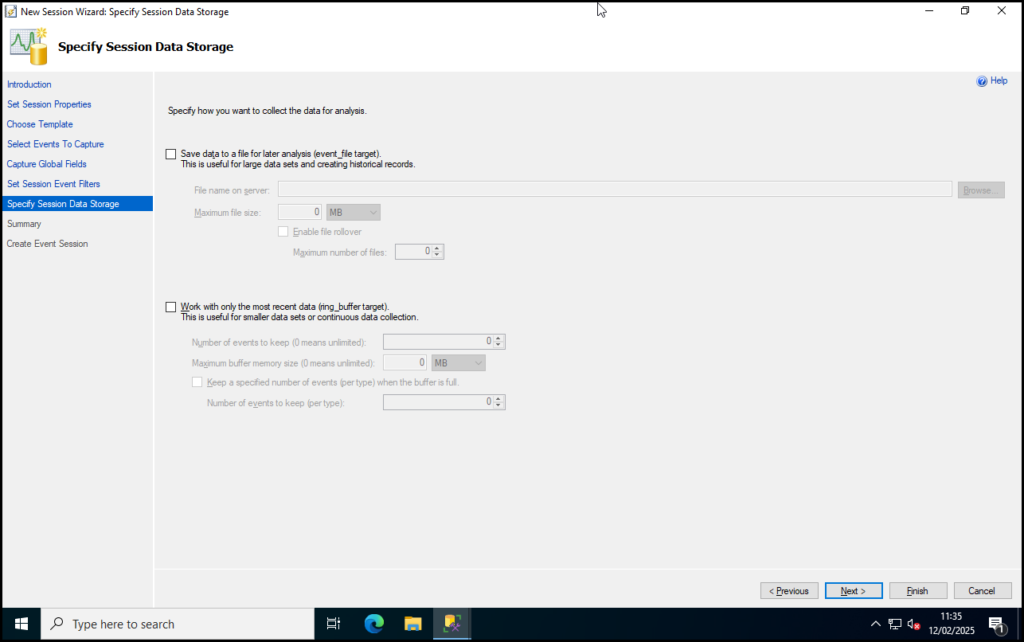

Step 9: Leave the data storage options unchecked for now. We’re only demoing this XEvent, so we aren’t interested in keeping the data. Click Next.
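If you do later want to keep the captured data, one option is to add a file target to the session once it exists. The sketch below is hypothetical: the folder `C:\XEvents` and the file name `Tutorial01.xel` are assumptions, and the folder must already exist and be writable by the SQL Server service account. The stored events can then be read back with `sys.fn_xe_file_target_read_file`.

```sql
-- Hypothetical file target so captured events are kept on disk.
-- Assumes C:\XEvents exists and the SQL Server service account can write to it.
ALTER EVENT SESSION [Tutorial 01] ON SERVER
ADD TARGET package0.event_file (
    SET filename = N'C:\XEvents\Tutorial01.xel',
        max_file_size = (50));  -- maximum size of each file, in MB
GO

-- Read the stored events back; the wildcard matches every rollover file
-- (SQL Server appends a sequence number and timestamp to the file name).
SELECT object_name,
       CAST(event_data AS xml) AS event_xml
FROM sys.fn_xe_file_target_read_file(N'C:\XEvents\Tutorial01*.xel', NULL, NULL, NULL);
```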

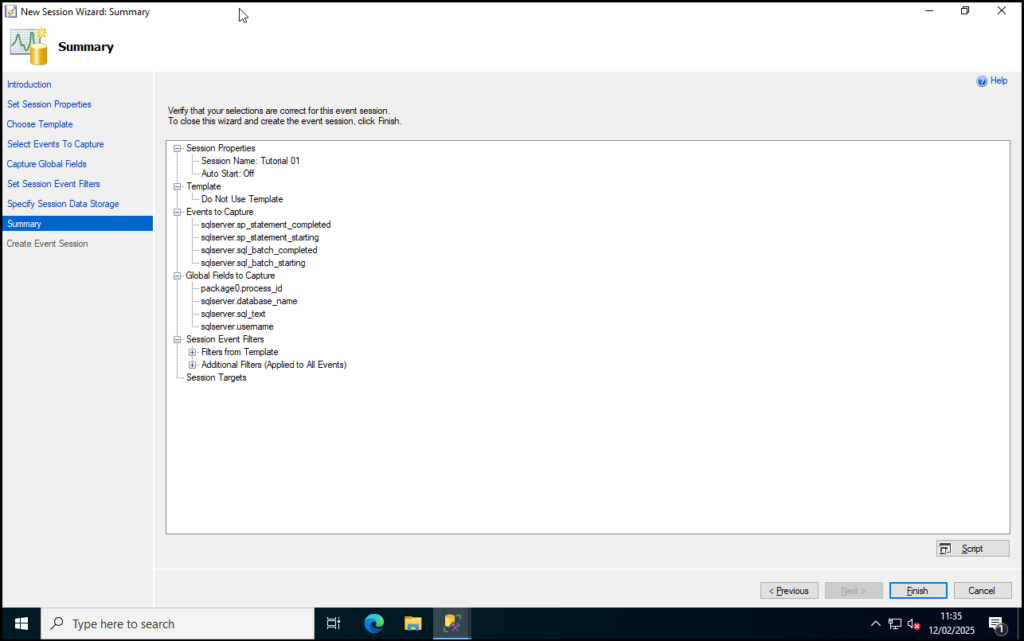

Step 10: Review the summary and click Finish when you are satisfied with your selections.
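For reference, the wizard choices above can also be expressed as T-SQL, which is handy for re-creating the session on another instance. This is a sketch that approximates Steps 4 to 9, not the wizard’s exact output. It assumes the wizard’s process_id global field maps to `package0.process_id` (verify the package on your instance via `sys.dm_xe_objects`), and it will fail if a session named Tutorial 01 already exists, so rename it or drop the existing one first.

```sql
-- Sketch of a T-SQL definition approximating the wizard choices in Steps 4 to 9.
-- Assumption: the wizard's process_id global field is package0.process_id.
CREATE EVENT SESSION [Tutorial 01] ON SERVER
ADD EVENT sqlserver.sql_batch_starting (
    ACTION (sqlserver.database_name, package0.process_id, sqlserver.sql_text, sqlserver.username)
    WHERE ([sqlserver].[database_name] = N'AdventureWorks2022')),
ADD EVENT sqlserver.sql_batch_completed (
    ACTION (sqlserver.database_name, package0.process_id, sqlserver.sql_text, sqlserver.username)
    WHERE ([sqlserver].[database_name] = N'AdventureWorks2022')),
ADD EVENT sqlserver.sp_statement_starting (
    ACTION (sqlserver.database_name, package0.process_id, sqlserver.sql_text, sqlserver.username)
    WHERE ([sqlserver].[database_name] = N'AdventureWorks2022')),
ADD EVENT sqlserver.sp_statement_completed (
    ACTION (sqlserver.database_name, package0.process_id, sqlserver.sql_text, sqlserver.username)
    WHERE ([sqlserver].[database_name] = N'AdventureWorks2022'))
-- No ADD TARGET clause: Step 9 left data storage unchecked, so events are only
-- visible through the live data viewer while the session is running.
WITH (STARTUP_STATE = OFF);  -- do not start automatically when the instance restarts
```

Each event carries its own ACTION list and WHERE predicate, which is why the wizard’s single filter is repeated four times here.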

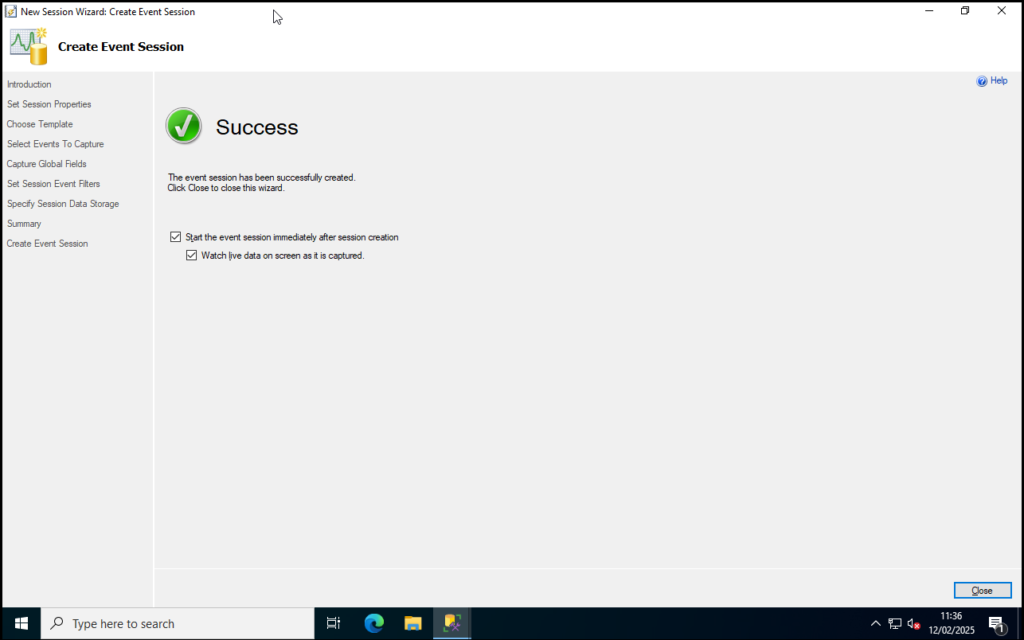

Step 11: As we’d like to use this XEvent straight away, check both options on the final screen (start the event session immediately, and watch live data on screen as it is captured), then click Close.
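If you left the start option unchecked, or stop the session later, you can start it from T-SQL instead and then open the live view by right-clicking the session and choosing Watch Live Data. This sketch only starts Tutorial 01 if it is not already running (running sessions appear in `sys.dm_xe_sessions`) and then confirms its state.

```sql
-- Start the session only if it is not already running.
IF NOT EXISTS (SELECT 1 FROM sys.dm_xe_sessions WHERE name = N'Tutorial 01')
    ALTER EVENT SESSION [Tutorial 01] ON SERVER STATE = START;
GO

-- Confirm the session is now running and when it was started.
SELECT name, create_time
FROM sys.dm_xe_sessions
WHERE name = N'Tutorial 01';
```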

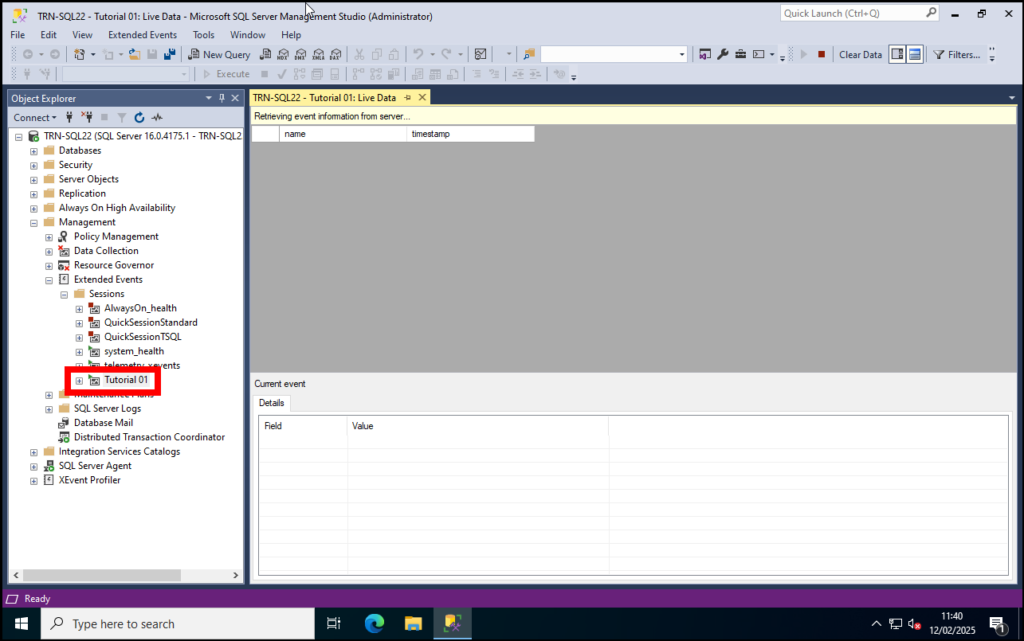

You will then return to SSMS. Note that the session Tutorial 01 now appears under Instance Name > Management > Extended Events > Sessions in Object Explorer, and that a new window showing the session’s live event data is open.

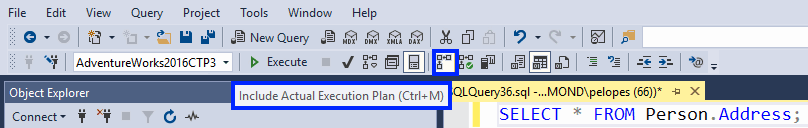

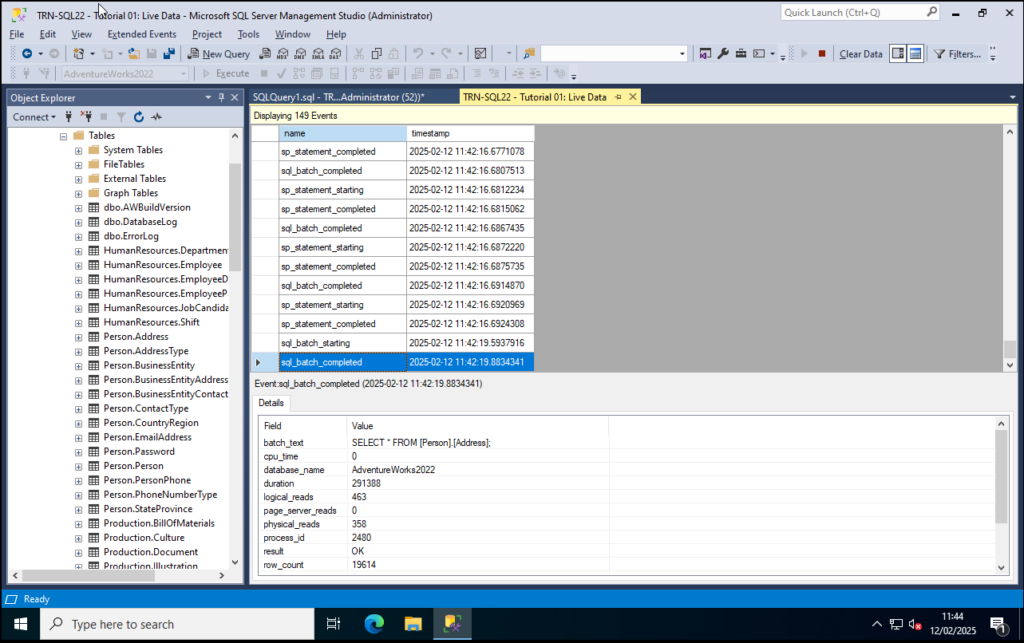

At this point, run a simple SELECT query against AdventureWorks2022. I used a lazy one just for the demo:

```sql
USE AdventureWorks2022;
SELECT * FROM [Person].[Address];
```

You’ll now see the captured events appearing in the session’s live data window.

What you’ve just done is a simple event session trace, but you can use the steps above to further customise your XEvent to capture the information you require.
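When you have finished experimenting, it is good practice to stop a demo session rather than leave it running. This sketch stops Tutorial 01 if it is running and then drops its definition; skip the second statement if you want to keep the session for later reuse.

```sql
-- Stop the session if it is running; its definition stays on the instance.
IF EXISTS (SELECT 1 FROM sys.dm_xe_sessions WHERE name = N'Tutorial 01')
    ALTER EVENT SESSION [Tutorial 01] ON SERVER STATE = STOP;
GO

-- Remove the definition entirely once you no longer need the session.
IF EXISTS (SELECT 1 FROM sys.server_event_sessions WHERE name = N'Tutorial 01')
    DROP EVENT SESSION [Tutorial 01] ON SERVER;
GO
```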

Conclusion

We’ve discussed what SQL Server Extended Events are and what their advantages are, and worked through a tutorial to build a very basic XEvent on a demo database.

If your organisation needs assistance with SQL Server, we are here to help. Digital Incite and Matter Ltd’s consultants are SQL Server trained, certified and experienced with a wide variety of business applications. Get in touch with us today to discuss your requirements.